Dynamic Analysis with Text-to-Python

Generating and Executing Python Code On-the-Fly from Natural Language

In the previous chapter, we explored how Text-to-SQL translates natural language into database queries. This is powerful for data retrieval, but what if you need to perform complex analysis, custom visualizations, or data transformations beyond SQL's capabilities?

This is where Text-to-Python comes in.

Instead of generating a SQL query, a Text-to-Python system generates an entire Python script to perform the requested analysis. This unlocks a new level of flexibility, allowing you to leverage the full power of the Python data science ecosystem—pandas, Matplotlib, scikit-learn, and more—all from a simple, natural language prompt.

This chapter guides you through the concepts, architecture, and practical steps to build a dashboard that generates and executes Python code in real-time.

Why Text-to-Python? The Next Frontier of BI

Traditional analysis methods present a trade-off:

- No-Code Tools (e.g., Tableau, Power BI): Easy to use, but limited to vendor-provided features and chart types. Custom logic is difficult or impossible.

- Programming (e.g., Jupyter Notebooks): Infinitely flexible, but requires specialized skills and is too slow for the rapid back-and-forth of business meetings.

Text-to-Python offers the best of both worlds: the flexibility of code with the ease of use of natural language.

Text-to-SQL vs. Text-to-Python

| Feature | Text-to-SQL | Text-to-Python |

|---|---|---|

| Core Task | Data Retrieval | Data Analysis & Visualization |

| Output | A SQL SELECT statement. | A complete Python script. |

| Flexibility | Limited to SQL's capabilities. | Can perform complex transformations, statistical analysis, and create custom charts. |

| Best For | Answering "what" questions (e.g., "What were last month's sales?"). | Answering "why" and "how" questions (e.g., "Why did sales decline? Show the correlation with marketing spend."). |

By integrating Text-to-Python, you can build a BI tool where a user can ask, "Show the year-over-year sales growth, adjusted for last year's marketing campaign," and the system will instantly generate and run the Python code to perform that multi-step analysis.

How Text-to-Python Works: An Architectural Overview

A Text-to-Python system consists of three core components: a User Interface, an LLM for code generation, and a secure Sandbox for code execution.

- Code Generation: The user's request and data context (e.g., file path, schema) are sent to an LLM, which generates a Python script for the analysis.

- Secure Code Execution: The generated Python code is executed in a secure, isolated sandbox environment. This is the most critical part of the architecture.

The Importance of a Sandbox

Executing LLM-generated code poses significant security risks. A malicious or poorly-formed prompt could trick the LLM into generating code that attempts to:

- Access sensitive files on the host system.

- Execute harmful system commands (

rm -rf /). - Leak private data or environment variables.

- Consume excessive system resources, causing a denial of service.

Standard Python functions like exec() and eval() offer no protection against these threats. Never execute LLM-generated code directly on your server or local machine.

A sandbox is an isolated, containerized environment for secure code execution. It has no access to the host system's filesystem, network, or environment variables, ensuring that even malicious code cannot cause harm.

Major Sandbox Services

Several cloud services provide secure sandboxes for AI applications.

- E2B: A cloud runtime environment designed for AI agents and code interpretation, offering fast startup times and a simple API.

- Daytona: A platform for managing standardized development environments.

- Modal: A general-purpose cloud computing platform for running containers, functions, and applications.

This chapter uses E2B for its focus on AI applications and ease of use.

Building a Text-to-Python App with Streamlit and E2B

Let's build a simple Text-to-Python application to analyze an uploaded CSV file.

Prerequisites

- E2B API Key: Obtain from the E2B Dashboard.

- OpenAI API Key: Obtain from the OpenAI Platform.

- Environment Variables: Store your keys in a

.envfile.E2B_API_KEY="e2b_..." OPENAI_API_KEY="sk-..." - Dataset: Download a sample dataset (e.g., the movies dataset from the example code) and place it in your project directory.

Designing the Prompt

A well-structured prompt is crucial. It must provide the LLM with a clear role, context, and constraints.

def create_prompt(user_input: str, file_path: str) -> str:

prompt = f"""

You are an expert data analyst. Your task is to generate a Python script to analyze a dataset.

### Context

- The user wants to analyze a CSV file located in the sandbox at: `{file_path}`.

- The script will be executed in a secure sandbox environment.

- The output must be a PNG image file saved to `/home/user/output.png`.

### Dataset Schema

The CSV file has the following columns:

- 'id': number, movie ID

- 'original_language': string (e.g., "en", "es")

- 'original_title': string, movie title

- 'popularity': float, not normalized

- 'release_date': string, 'YYYY-MM-DD' format

- 'vote_average': float, 0-10

- 'vote_count': integer

### User's Request

{user_input}

### Your Task

Generate a single, complete Python script that:

1. Loads the data from the CSV file.

2. Performs the analysis requested by the user.

3. Generates a single chart (using Matplotlib or Seaborn) visualizing the result.

4. Saves the chart as a PNG file to `/home/user/output.png`.

5. The script must be non-interactive and self-contained.

"""

return promptThis prompt clearly defines the goal, data structure, and required output format (a PNG image).

Implementation Steps

The full application code is at the end of this section. Here is a breakdown of the key functions.

1. main(): The Streamlit UI

This function sets up the Streamlit interface, including a text area for the user's question and a submit button.

2. generate_code(): Calling the LLM

This function takes the user's input, creates the prompt, and calls the OpenAI API to generate the Python code.

3. run_code_in_sandbox(): Secure Execution

This is the core of the application. It takes the generated Python code and executes it inside an E2B sandbox.

from e2b import Sandbox

def run_code_in_sandbox(python_code: str):

# The E2B sandbox is automatically configured from your API key

with Sandbox() as sandbox:

# 1. Upload the dataset to the sandbox

with open("dataset.csv", "rb") as f:

sandbox.files.write("dataset.csv", f)

# 2. Run the generated code

exec_result = sandbox.notebook.exec_cell(python_code)

# 3. Check for errors

if exec_result.error:

st.error(f"Execution Error: {exec_result.error.name}")

st.code(exec_result.error.traceback)

return None

# 4. Download the resulting image

try:

image_bytes = sandbox.files.read_bytes("/home/user/output.png")

return image_bytes

except FileNotFoundError:

st.error("The script did not generate an output image.")

return NoneThis function handles the entire lifecycle: creating the sandbox, uploading data, running the code, and retrieving the result, all while isolating the execution from the host system.

Full Application Code

(The full, runnable code for app.py is omitted for brevity.)

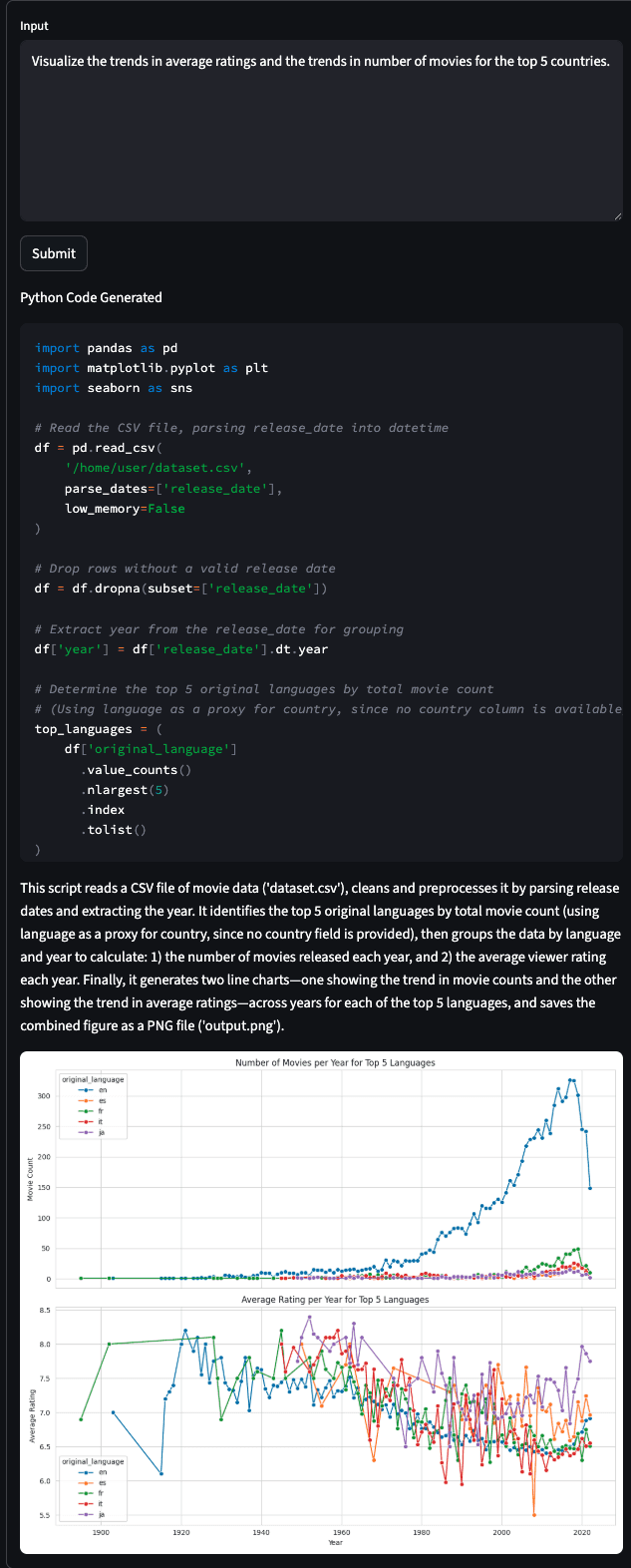

When the app is run, the user can ask a question, the AI generates and executes the code, and the resulting chart is displayed in the Streamlit interface.

Advanced Applications

The basic Text-to-Python system can be extended to create more powerful analysis tools.

- Interactive Chat Interface: Build a conversational agent that remembers the analysis context, allowing for follow-up questions like, "Now break it down by region," or "Change that to a pie chart."

- Dynamic Package Installation: Instruct the sandbox to install necessary Python libraries (

pandas,seaborn,scikit-learn) on the fly before executing the code. - Multi-Modal Analysis: Design the system to work with more than just CSV files, such as connecting to databases, calling external APIs, or analyzing uploaded images.

- Automated Code Correction: If the generated code fails, create a loop where the error message is sent back to the LLM, which then attempts to debug and correct its own code before re-executing.

A sample application integrating these advanced features is available at: GitHub Repository

Summary

Text-to-Python represents a paradigm shift in data analysis, moving from rigid, pre-defined dashboards to dynamic, conversational interfaces. It empowers users to perform complex, multi-step analyses using the full capabilities of the Python ecosystem.

Key Takeaways:

- Beyond SQL: Text-to-Python handles complex transformations, statistics, and custom visualizations that are impossible with SQL alone.

- Security is Non-Negotiable: Never execute LLM-generated code without a secure sandbox. Services like E2B are essential for building safe applications.

- Prompting is Everything: A well-designed prompt with clear context and constraints is key to generating accurate and reliable code.

- The Future is Conversational: The true power of this technology lies in building interactive, chat-based agents that can reason, debug, and collaborate with users on complex analytical tasks.

In the final chapter, we will discuss how to deploy, operate, and continuously improve the BI dashboards you have learned to build.

Get your first dashboard in 10 minutes

Free to get started · No credit card required

Start talking to your data