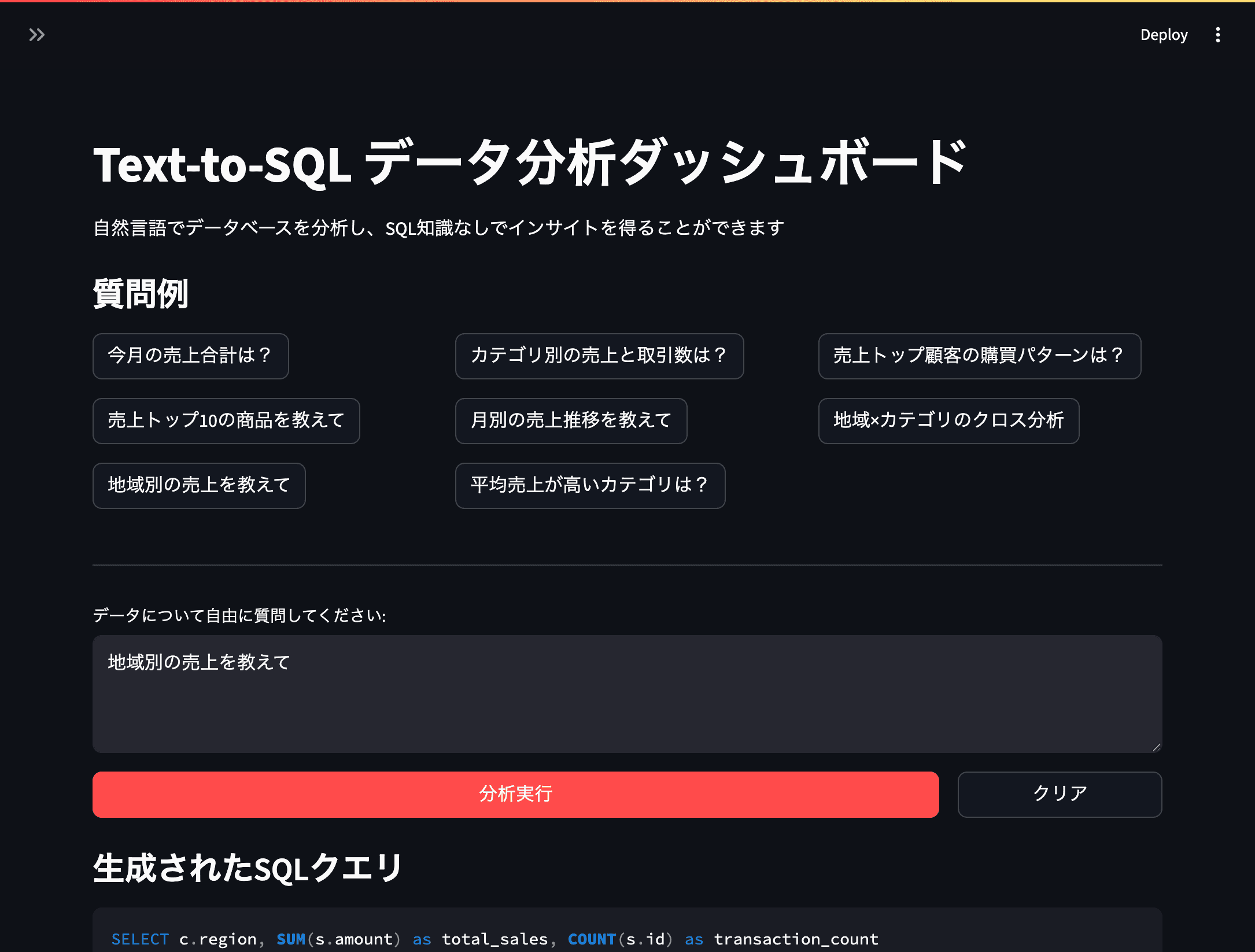

Building On-Demand Dashboards with Text-to-SQL

Generate SQL Queries from Natural Language to Empower Self-Service Analytics

In traditional data analysis, the ability to write SQL is often a bottleneck. Crafting queries with complex joins and aggregations requires specialized knowledge, creating a barrier between business users and the data they need.

Text-to-SQL technology addresses this by allowing anyone to ask questions of their data in plain English. A question like, "Compare last month's regional sales with the same period last year," can be automatically converted into a precise SQL query, with the results visualized instantly.

This chapter provides a conceptual and practical guide to building an on-demand data analysis dashboard using Text-to-SQL.

The Value of On-Demand Analysis

Beyond Static Dashboards

While effective for monitoring known KPIs, traditional BI tools like Tableau and Power BI struggle with ad-hoc questions. Answering a new question often requires a time-consuming process involving an analyst.

Text-to-SQL breaks this cycle.

| Constraint | Traditional BI Tools | Text-to-SQL Dashboards | |---| | Flexibility | Limited to pre-defined metrics. | Responds to any question in real-time. | | Speed to Insight | Hours or days for new questions. | Seconds. | | Accessibility | Requires training to build reports. | Usable immediately with natural language. | | Cost | High per-user license fees. | Pay-as-you-go for LLM API usage. |

A New Way to Explore Data

Text-to-SQL democratizes data access, empowering business users to conduct their own exploratory analysis without waiting for an expert. It transforms the dashboard from a static report into a dynamic, conversational analysis tool.

When to Use Text-to-SQL

Text-to-SQL is a powerful addition to the analyst's toolkit, not a replacement for all other tools.

- Use Text-to-SQL for: Ad-hoc analysis, answering unexpected questions, and enabling self-service for business users.

- Use Static Dashboards for: Routine monitoring of core business KPIs (e.g., daily sales).

- Use Jupyter/Python for: Deep, complex analysis, such as building machine learning models or performing advanced statistical tests.

How Text-to-SQL Works

The process involves two main steps:

- AI-Powered Translation: The user's natural language question is sent to a Large Language Model (LLM) along with the database schema. The LLM uses the schema to understand the table structure and relationships, then translates the user's intent into a SQL query.

- Execution and Visualization: The generated SQL query is executed against the database. The results are returned as a pandas DataFrame and can be displayed as a table or chart.

Designing an Effective Text-to-SQL Prompt

The accuracy of the generated SQL depends heavily on the prompt quality. A well-designed prompt guides the AI to produce the correct query while adhering to important constraints.

Here is a robust, reusable template for a Text-to-SQL prompt.

def create_text_to_sql_prompt(question: str, schema_info: str) -> str:

prompt = f"""

You are an expert SQL analyst. Your task is to generate a single, syntactically correct SQL query

based on the user's question and the provided database schema.

### Database Schema

{schema_info}

### User's Question

{question}

### Important Rules

1. **Only generate `SELECT` statements.** Never generate `UPDATE`, `DELETE`, `DROP`, or other modifying statements.

2. The query must be for a SQLite database.

3. Always limit the results to a maximum of 1,000 rows using `LIMIT 1000`.

4. Return ONLY the SQL query, with no additional explanation, text, or markdown.

"""

return promptThis prompt establishes three key elements:

- Role: "You are an expert SQL analyst."

- Context: The database schema and the user's question.

- Constraints: Safety rules and output format.

Providing a Detailed Database Schema

The more context the LLM has about your database, the better its queries will be. It is a best practice to programmatically fetch the schema and pass it to the LLM.

def get_database_schema(db_connection) -> str:

"""Fetches the schema of all tables and formats it for the LLM."""

query = "SELECT name, sql FROM sqlite_master WHERE type='table';"

schema_df = pd.read_sql(query, db_connection)

schema_text = "Here are the `CREATE TABLE` statements for the database:\n\n"

for _, row in schema_df.iterrows():

schema_text += row['sql'] + "\n\n"

return schema_text

# Example of schema_info text passed to the prompt:

"""

Here are the `CREATE TABLE` statements for the database:

CREATE TABLE sales (

id INTEGER,

customer_id INTEGER,

product_id INTEGER,

amount REAL,

quantity INTEGER,

sale_date TEXT

)

CREATE TABLE customers (

id INTEGER,

name TEXT,

region TEXT,

registration_date TEXT

)

...

"""Providing CREATE TABLE statements gives the LLM rich information about column names, data types, and relationships, leading to more accurate queries.

Building a Text-to-SQL App with Streamlit

Let's build a functional Text-to-SQL application. The full code is provided at the end of this section.

To run the final app:

pip install streamlit pandas openai

streamlit run app.pyStep 1: Building the User Interface

The UI consists of a sidebar for settings (e.g., API key) and a main area for user questions. We use st.text_area for input and st.button to trigger the analysis.

Step 2: Generating the SQL Query

When the user clicks "Run Analysis," their question and the database schema are sent to the LLM using our create_text_to_sql_prompt function.

if st.button("Run Analysis"):

if user_question:

schema_info = get_database_schema(conn)

prompt = create_text_to_sql_prompt(user_question, schema_info)

sql_query = generate_sql_with_api(prompt, api_key)

st.code(sql_query, language="sql")

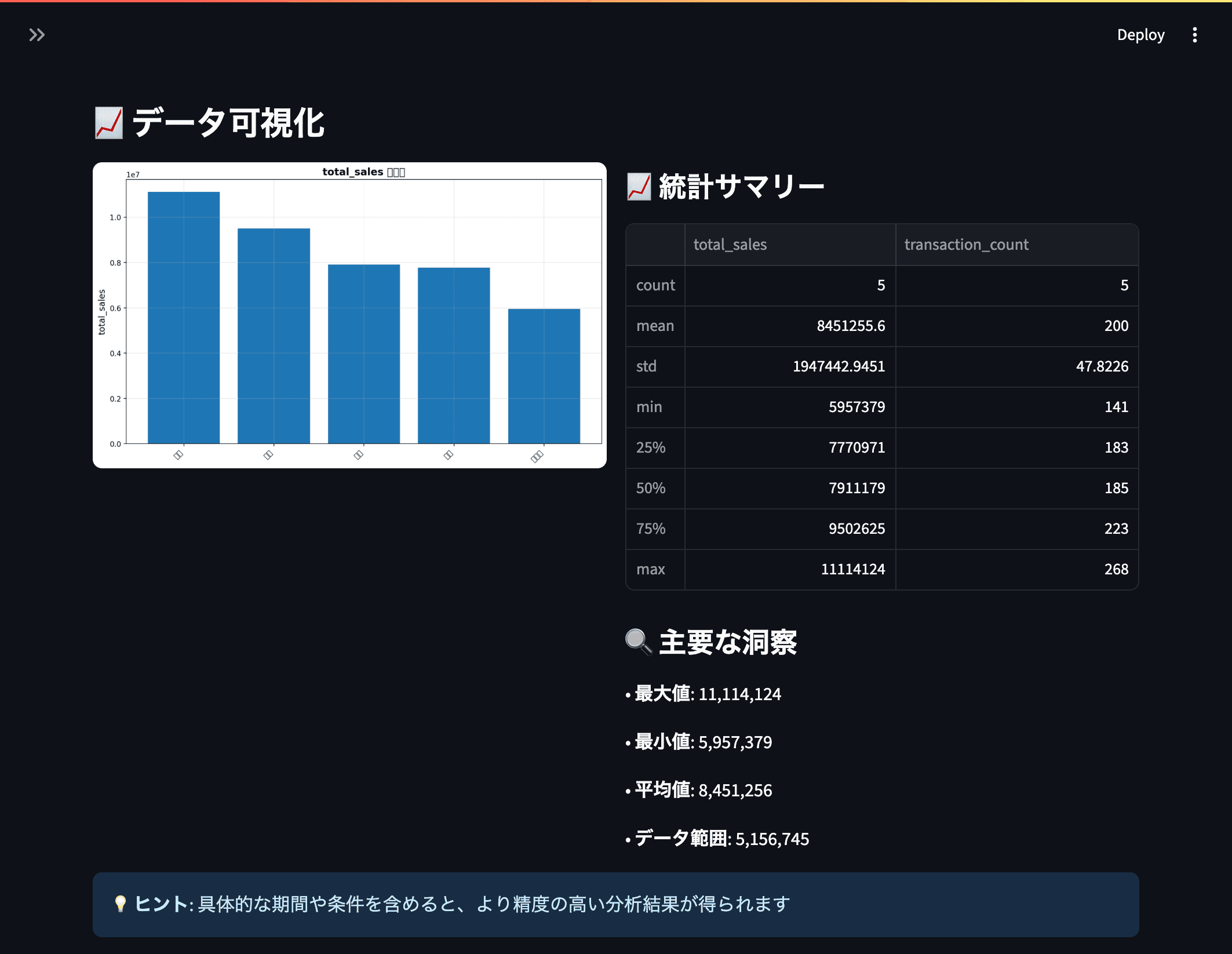

# ... execute and display ...Step 3: Executing the Query and Displaying Results

Before executing the generated SQL, a security check is critical. The is_safe_sql function ensures the query is a SELECT statement and does not contain dangerous keywords.

def is_safe_sql(sql: str) -> bool:

"""A basic security check for the SQL query."""

sql_upper = sql.upper().strip()

if not sql_upper.startswith('SELECT'):

return False

dangerous_keywords = ['DELETE', 'DROP', 'INSERT', 'UPDATE', 'CREATE', 'ALTER']

if any(keyword in sql_upper for keyword in dangerous_keywords):

return False

return True

# After generating the SQL...

if is_safe_sql(sql_query):

df = execute_sql_query(sql_query, conn)

st.dataframe(df)

if len(df.columns) > 1:

st.bar_chart(df.set_index(df.columns[0]))

else:

st.error("The generated SQL query is not safe to execute.")This creates a complete loop: the user asks a question, the AI translates it to safe SQL, the query is run, and the results are displayed.

Full Implementation Example

(The full, runnable code for app.py is omitted for brevity but follows the structure outlined above.)

# The full app.py code as provided in the original file would go here.

# This includes main(), create_sample_database(), render_analysis_interface(), etc.

# ...Advanced Text-to-SQL: Dynamic Visualization

The application can be enhanced by adding a layer of intelligence to the visualization. Instead of a generic bar chart, you can ask the LLM to suggest the best chart type for the given query and data.

Example Prompt Enhancement:

...

After generating the SQL query, please also suggest the most appropriate chart type

from this list: ["bar", "line", "pie", "scatter"].

Return the result as a JSON object: {"sql": "...", "chart_type": "..."}This allows the app to not only answer the user's question but also present the answer in the most effective visual format.

Summary

Text-to-SQL technology democratizes data access, complementing traditional BI dashboards by providing a flexible, intuitive way to handle ad-hoc analysis. Building a Text-to-SQL application with Streamlit can empower anyone in your organization to have a direct conversation with your data.

Key Takeaways:

- Empowerment through Language: Text-to-SQL removes the SQL barrier, allowing users to ask complex questions in plain English.

- Prompting is Key: System performance hinges on well-crafted prompts that provide clear roles, context (schema), and constraints.

- Safety First: Always validate and sanitize generated SQL before execution to prevent security risks.

- The Right Tool for the Job: Use Text-to-SQL for exploration and ad-hoc analysis, and traditional dashboards for monitoring established KPIs.

In the next chapter, we will explore a related and even more powerful concept: Text-to-Python, generating and executing entire data analysis scripts on the fly.

Get your first dashboard in 10 minutes

Free to get started · No credit card required

Start talking to your data